In one of my earlier blogs, I shared tips on how to build a Text-To-Speech application in Python, which you can read in the post: Interactive Text-To-Speech App…

In this post, I’ll show you the reverse…where we can take our voice, and even an audio-recording, and transcript them into English text. Once I do that, I will demonstrate how to send that converted text back into the Text-to-speech engine and have the computer read it back to me in a completely different voice (male/female, etc. and in computer-synthesized voice).

The concepts covered here are:

How to recognize speech via Mic, choose a mic programatically, show the Speech->Text results on screen and save to a MP3/WAV file.

— microphone/audio file-> text

How to also pass the converted text from Speech_recognition to Text_to_speech engine and speak it back in synthesized voice and also save that audio to a file…and play it back on demand.

— microphone/audio file-> text -> speech

The libraries I’ll use are: SpeechRecognition, and pyaudio

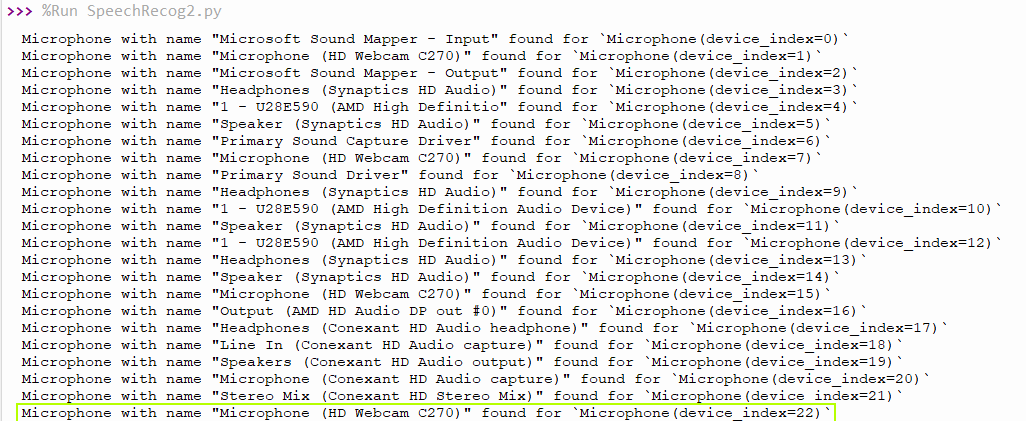

First, it’s good to check what input devices are on the system. If no specific one is specified, the system default will be used. To select a specific device, pass the device’s index value to optional param device_index as in: with sr.Microphone(device_index=0) as source:

So to use Webcam C270 mic, I’d code: with sr.Microphone(device_index=22) as source:

because that’s the index shown when we enumerated the devices using this code:

for index, name in enumerate(sr.Microphone.list_microphone_names()):

print("Microphone with name \"{1}\" found for `Microphone(device_index={0})`".format(index, name))

And its output on my system is:

Now we can import the library and create a recognizer object:

import speech_recognition as sr r = sr.Recognizer()

To capture the audio from the microphone, I write:

with sr.Microphone() as source:

print("Say something!")

audio = r.listen(source)

try:

data = r.recognize_google(audio)

print(data)

except:

print("Could not hear you. Please try again")

Also I saved the file as a MP3 with file.write(audio.get_wav_data()) methods.

Next, I’ll run the converted text above (not the audio) through a Text-To-Speech engine (as shown in my earlier blog) and convert that text generated by Speech Recognition engine to a new audio file. Except, this new audio will be using computer synthesized voice, not mine!

For TTS, we need to also import pyttsx3 library

engine = pyttsx3.init() # create object

rate = engine.getProperty('rate')

engine.setProperty('rate',180)

voices = engine.getProperty('voices')

engine.setProperty('voice', voices[1].id)

The list of voices is available as: index 0 for male, 1 for female. default is male if no voice property specified.

I also set the speaking rate to 180 which seems more natural from its default 200.

Example

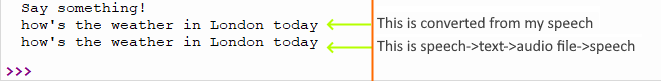

In this example, first I spoke to the mic this sentence: “How’s the weather in London today?”

I print out on screen (in addition to saving the files described above) the outputs from the application stages: Mic->Text and then at Mic-Text->Speech

The screen output is below (I’m adding the arrows to indicate which output came from which source):

I also saved my original spoken sentence as captured by the program, and you can hear it below:

As well as the audio that was computer-generated to speak back (using female voice):

The accuracy of audio->text and text->audio was accurate (the tonation is not as good as Alexa’s or Siri’s but more on that later)! As these libraries continue to improve, the application will automatically improve as I use the latest version without me having to worry about the nitty gritty details.

As a logical next step, you can infer that you can extend this to actually answer the question asked! With these capabilities explained above without having to use high-powered cognitive services, we can extend this app to parse the transcript and take conditional actions, such as fetching the weather, time, stock prices, Wikipedia results, images…anything that exposes their API through a webservice. I’ve already shown in earlier posts how to get those data (e.g. Weather, Time, Air-quality, etc.), so we have all the building blocks to construct a basic interactive speech-driven application like Alexa or Siri. For world-class accuracy and scale, you’ll have to cognitive web services, and then get the data/results back in text and then convert it back to voice like Siri or Alexa do. That’s something more of a larger undertaking…this is where Apple (Siri), Amazon (Alexa), and Microsoft, IBM, and many other companies spent and continues to spend billions of $$$ in AI and speech recognition and makes LOTs of money 🙂