The modern AI ecosystem isn’t just a race for model supremacy; it’s a dense web of investments, chip dependencies, and cloud entanglements. Some of the biggest players are simultaneously competitors, collaborators, and customers. The relationships are intricate, often opaque, and increasingly circular.

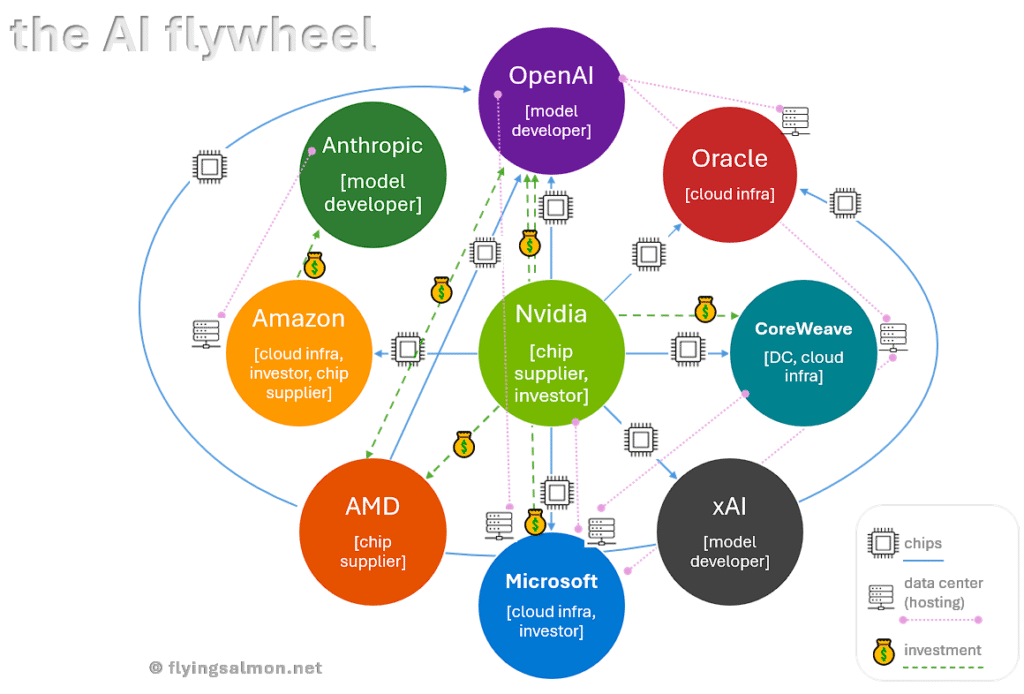

Take Nvidia, for example: it invests in OpenAI, which in turn buys Nvidia chips. Nvidia also backs CoreWeave, a cloud provider that rents GPU capacity to OpenAI and Microsoft, both of which buy Nvidia hardware. Meanwhile, Oracle builds data centers for OpenAI using Nvidia chips, and AMD gives OpenAI equity warrants worth $34B, incentivizing chip purchases from both AMD and Nvidia.

These entities are not just trading chips; they’re trading influence. Microsoft has invested $13B in OpenAI, licenses its models, and hosts them on Azure. Amazon, while building its own AI chips (Trainium and Inferentia), invested $4B in Anthropic, which runs on AWS infrastructure that is often powered by Nvidia GPUs. Even xAI, Elon Musk’s model developer, buys Nvidia chips via special-purpose vehicles and hosts on Azure and Oracle.

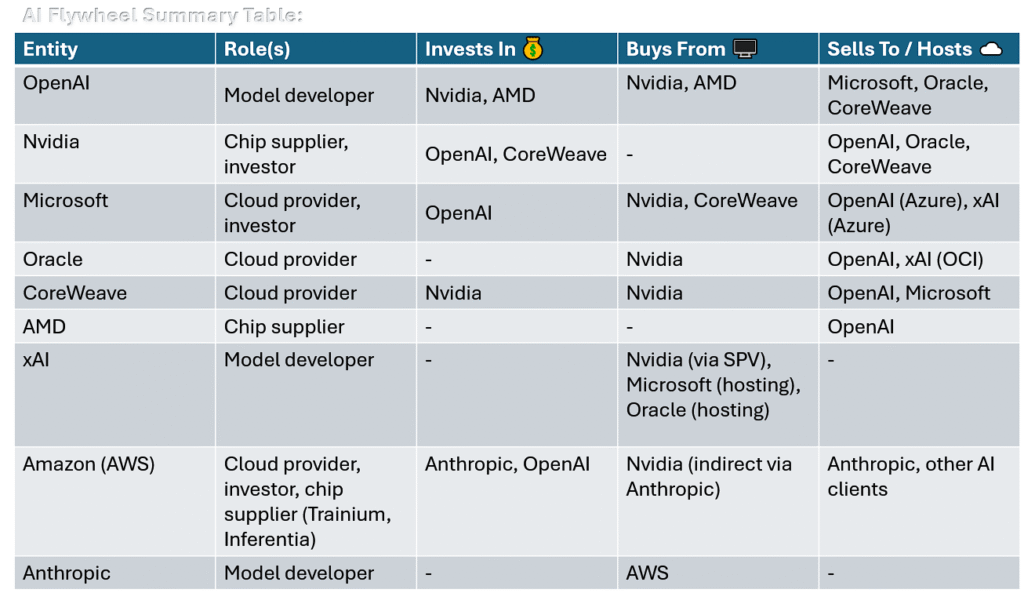

To summarize, in this ecosystem:

• Partners are competitors: Microsoft and Amazon both host frontier models, but compete in cloud and chip strategy.

• Chipmakers are investors and suppliers: Nvidia invests in OpenAI and AMD grants it equity warrants, while OpenAI commits to buying chips from both.

• Cloud providers are customers and landlords: CoreWeave and Oracle buy Nvidia chips, then rent GPU capacity to Nvidia-backed tenants.

Billions of dollars flow in and out, often through equity stakes, warrants, or opaque hosting deals. The full picture is hard to track; many arrangements are private, and disclosures are partial at best.

Notably absent from this matrix are Apple and Google. Apple’s AI strategy remains largely device-centric and closed-loop, while Google builds and hosts its own models (Gemini) on Google Cloud, which leaves it largely self-contained and less entangled in external dependencies.

Visualization: Nvidia-Centric Circularity

The radial diagram above places Nvidia at the center, with directional arrows and lines showing investment flows, chip purchases, and hosting relationships. It captures how capital, compute, and strategic leverage circulate among OpenAI, Microsoft, CoreWeave, Oracle, AMD, xAI, Amazon, and Anthropic. The light gray dotted line with diamond tips marks a software licensing relationship (e.g., Microsoft licenses OpenAI’s models).
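For readers who want to poke at the circularity themselves, the relationships described in this post can be sketched as a small directed graph. This is only an illustrative sketch: the edge list is transcribed from the prose here (not from the diagram's underlying data), the relation names and the `cycles_through` helper are made up for this example, and a "cycle" simply means a chain of relationships that leads back to the starting company, not a precise trace of dollars.

```python
from collections import defaultdict

# Each edge reads like a sentence: (subject, relation, object).
# Relation labels are hypothetical names chosen for this sketch.
EDGES = [
    ("Nvidia", "invests_in", "OpenAI"),
    ("Nvidia", "invests_in", "CoreWeave"),
    ("OpenAI", "buys_chips_from", "Nvidia"),
    ("CoreWeave", "rents_gpus_to", "OpenAI"),
    ("CoreWeave", "rents_gpus_to", "Microsoft"),
    ("CoreWeave", "buys_chips_from", "Nvidia"),
    ("Microsoft", "buys_chips_from", "Nvidia"),
    ("Microsoft", "invests_in", "OpenAI"),
    ("Oracle", "builds_datacenters_for", "OpenAI"),
    ("Oracle", "buys_chips_from", "Nvidia"),
    ("AMD", "grants_warrants_to", "OpenAI"),
    ("Amazon", "invests_in", "Anthropic"),
    ("xAI", "buys_chips_from", "Nvidia"),
]


def build_graph(edges):
    """Group outgoing (relation, target) pairs by source company."""
    graph = defaultdict(list)
    for src, rel, dst in edges:
        graph[src].append((rel, dst))
    return dict(graph)


def cycles_through(graph, start):
    """Return every simple cycle that starts and ends at `start`.

    Each cycle is a list of company names, e.g. ["A", "B", "A"].
    Uses a plain depth-first search, which is fine for a graph this small.
    """
    found = []

    def walk(node, path):
        for _rel, nxt in graph.get(node, []):
            if nxt == start:
                found.append(path + [start])
            elif nxt not in path:
                walk(nxt, path + [nxt])

    walk(start, [start])
    return found


if __name__ == "__main__":
    graph = build_graph(EDGES)
    for cycle in cycles_through(graph, "Nvidia"):
        print(" -> ".join(cycle))
```

Running it lists the loops that pass through Nvidia, such as the direct Nvidia/OpenAI loop and the longer one that runs through CoreWeave. Companies with no path back to themselves in this edge list, such as Anthropic, return no cycles.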

As of now, there is no direct commercial or investment relationship between Anthropic and Nvidia; in fact, the two have recently found themselves on opposite sides of a policy debate. Anthropic does not buy Nvidia chips directly. Instead, it runs on AWS infrastructure, which includes both Amazon’s custom chips (Trainium, Inferentia) and Nvidia GPUs provisioned by AWS, so its reliance on Nvidia hardware is indirect (Anthropic → AWS → Nvidia).

Update: Oracle just announced that it will use AMD chips (MI450). OpenAI also announced last week that it will deploy AMD chips, and OpenAI is investing in AMD (via warrants). The radial diagram has been updated accordingly.